AI has become part of daily life. It’s behind chatbots, smart assistants, writing tools, and more. But the same technology that helps you draft emails or organize your calendar is now being used to pull off scams — and it’s working. These aren’t sloppy messages with broken grammar or strange phrasing. AI-generated scams are polished, quick, and increasingly hard to detect.

The average person may not realize it, but criminals are using AI tools to copy voices, write convincing messages, and even generate fake identities. These scams are cheap to launch and can reach thousands of people within minutes. It’s no longer about spotting errors; it’s about spotting patterns.

AI scams rely on speed, scale, and realism. What used to take days of setup and scripting can now be created in minutes. Voice models, image generators, and text-based AI can automate the work scammers once had to do manually.

One example is voice cloning. If someone posts a voice memo online, even briefly, a scammer can grab that sample and feed it into a tool that mimics tone, pacing, and accent. Victims report getting calls that sound exactly like their spouse or child, begging for help or money. These calls sound urgent — and real — which is why they work.

Phishing emails have also been upgraded. Many AI scams use bots to scrape your online presence and tailor messages specifically to you. If you recently posted about an online order, you might get a message from a “delivery service” asking you to confirm payment. The AI writes these emails with clean grammar and a realistic structure, so they often slip past spam filters and don’t look suspicious on the surface.

Fake chat support is another method. A person clicks a help link or searches for a brand’s support page, but instead of reaching the real site, they land on a cloned version. An AI-powered chatbot greets them, behaves naturally, and asks for login details or payment. The user thinks they’re resolving an issue, but they’re handing over sensitive data.

There’s also the issue of fake faces and profiles. Scammers create new social media accounts using AI-generated photos of people who don’t exist. These profiles often seem harmless — a coworker, a recruiter, or someone looking to connect — but once trust is built, they ask for money, access, or information.

In the past, scams had flaws. The language felt off, the formatting was messy, or the request seemed strange. Now, with AI involved, those signs are disappearing. The writing sounds natural. The photos look real. The voices are nearly perfect.

AI doesn’t just mimic people; it also reacts to how you interact with it. Some scammers use chat models that reply in real time and shift tone depending on how you respond. If you seem hesitant, the AI might become friendlier or more insistent. This adaptability makes it harder to tell whether you’re talking to a real person or to a scammer’s AI tool.

Another reason AI fraud is harder to detect is personalization. Many scammers gather bits of data from social media, forums, and leaks. AI then weaves that data into convincing narratives. You may get a message that mentions a recent event in your life or uses a familiar nickname. These small touches build trust quickly.

There’s also scale to consider. AI lets scammers run hundreds or thousands of scams at once. They can test different messages, see which versions get more responses, and update the rest in real time. That means even if one version of the scam doesn’t fool you, the next might be more refined.

You can’t stop AI scams from existing, but you can make yourself harder to trick. The most reliable defense is hesitation — stepping back for a moment before clicking or replying.

If someone calls asking for urgent action, verify their identity through another channel. Don’t rely on voice alone. For emails and messages, examine them closely. If something feels slightly off, it probably is. Some AI-written messages still overuse certain phrases or mimic customer service too perfectly.

Be cautious with links, especially from unknown senders. If you’re trying to reach support, go directly to the company’s official website by typing its address yourself, rather than clicking links in search results or ads, which are often used in fake support scams.

Limit how much personal information you share publicly. Posts about your travel plans, family, or even pets can all be used in targeted scams. The less data available, the less material AI scammers have to work with.

You should also update your security habits. Use two-factor authentication wherever possible. Keep your apps and devices updated. And report anything suspicious. Most platforms take reports seriously and can remove fake accounts or warn others.

Finally, don’t rush. AI scams often rely on urgency. Taking just a minute to question something suspicious can be the difference between being safe and being scammed.

AI fraud is not just about a few isolated incidents. It’s a growing problem that blends automation with manipulation. And as AI tools become easier to access, the number of people using them for scams will only increase.

At the same time, efforts to detect AI-generated content are still catching up. Some tools can now identify cloned voices or fake images, but they aren’t widespread or perfect. Until they are, individual awareness plays a large role in slowing the spread of AI scams.

The technology behind these scams isn’t bad in itself; the harm comes from how it’s being used. AI isn’t the scammer, just the tool. That’s why knowing how it works matters.

AI scams are no longer an abstract concern or limited to high-level fraud. They’re part of everyday life now — showing up in phone calls, emails, texts, and job offers. What makes them effective is their believability, thanks to AI’s ability to mimic human speech, writing, and appearance. While the methods have changed, your defense remains the same: stay alert, question what feels off, and don’t trust urgency over clarity. The more you understand how AI fraud works, the less likely you are to fall for it. Trust your judgment. That’s something AI can’t copy.

How AI with multiple personalities enables systems to adapt behaviors across user roles and tasks

Effective AI governance ensures fairness and safety by defining clear thresholds, tracking performance, and fostering continuous improvement.

Explore the truth behind AI hallucination and how artificial intelligence generates believable but false information

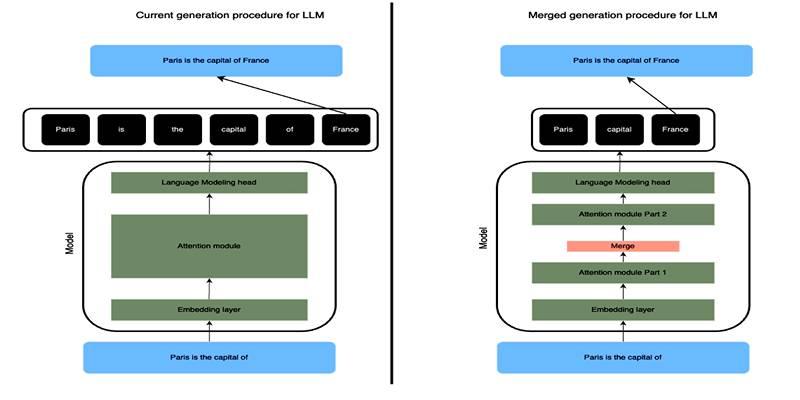

Learn how SLERP token merging trims long prompts, speeds LLM inference, and keeps output meaning stable and clean.

How to approach AI trends strategically, overcome FOMO, and turn artificial intelligence into a tool for growth and success.

Explore how Keras 3 simplifies AI/ML development with seamless integration across TensorFlow, JAX, and PyTorch for flexible, scalable modeling.

Craft advanced machine learning models with the Functional API and unlock the potential of flexible, graph-like structures.

How to avoid common pitfalls in data strategy and leverage actionable insights to drive real business transformation.

How neural networks revolutionize time-series data imputation, tackling challenges in missing data with advanced, adaptable strategies.

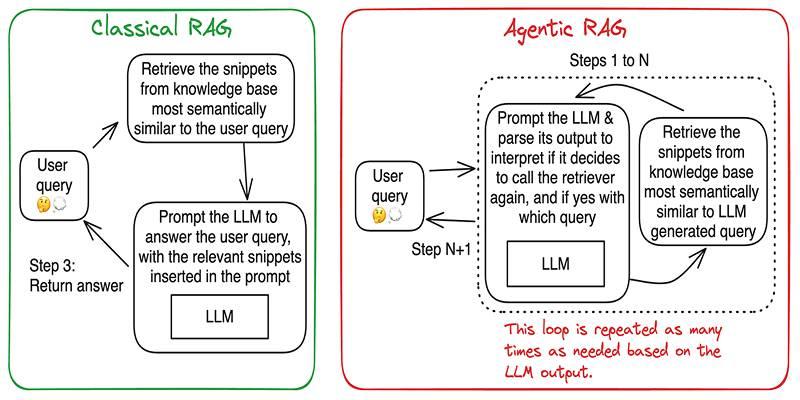

Build accurate, explainable answers by coordinating planner, retriever, writer, and checker agents with tight tool control.

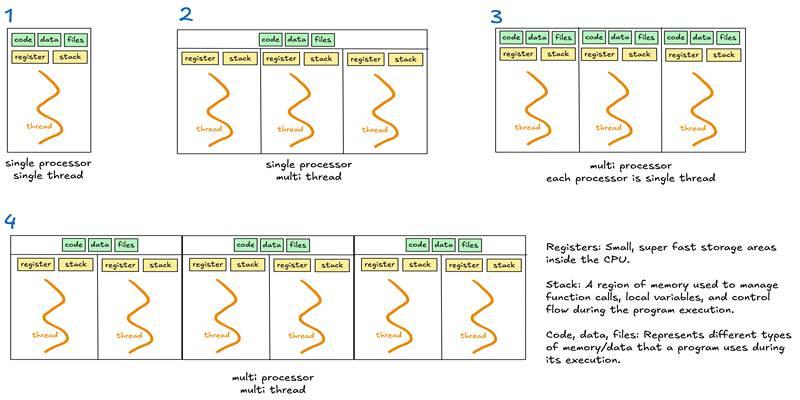

Learn when to use threads, processes, or asyncio to handle I/O waits, CPU tasks, and concurrency in real-world code.

Discover DeepSeek’s R1 training process in simple steps. Learn its methods, applications, and benefits in AI development