Artificial intelligence can now write essays, compose music, diagnose diseases, and hold conversations that feel almost real. Yet sometimes it says things that sound entirely made up. It might invent facts, quote nonexistent studies, or confidently describe people who never existed. These moments are called AI hallucinations, and while they're errors in logic or data, they also reveal something deeper about how both machines and humans interpret reality. When we talk about AI hallucinating, we're not only questioning the machine's truthfulness; we're also confronting how our own perceptions can bend around belief and bias.

AI hallucination happens when a model generates information that appears plausible but is false or unverified. It doesn’t lie intentionally; it simply fills in gaps using patterns from data. Unlike a search engine that retrieves exact results, generative models predict what words or images are likely to come next. When there’s missing or ambiguous information, they make educated guesses. Those guesses can occasionally sound convincing enough to pass as fact.

For instance, an AI asked about a scientific paper might fabricate a study with a real author’s name and an invented title. This is not a glitch but a reflection of how the model operates: it’s designed to produce coherent responses, not to verify truth. The same happens in image generation, where an AI might add objects that were never part of the prompt. In short, AI doesn’t know what’s real; it only knows what sounds real according to its training data.

This distinction matters because human users often mistake fluency for accuracy. When a sentence sounds right, we instinctively trust it. That’s where the line blurs between machine error and human assumption.

Before blaming machines, it helps to remember that people hallucinate, too, just differently. In psychology, hallucination is a sensory perception without an external cause. But in daily life, our brains constantly fill in blanks. Optical illusions, false memories, and misheard words are all forms of mental interpolation. We construct meaning from fragments and context, not pure facts.

Think about eyewitness accounts. Two people can see the same event and describe it differently; each is convinced they're correct. Our minds piece together stories based on expectation and prior experience, just like an AI model filling in gaps from patterns it’s learned. In that sense, AI hallucinations mimic the human habit of constructing reality from partial information.

This raises an uncomfortable question: if both humans and AI build plausible but occasionally false narratives, who’s really hallucinating, the tool or its user? When we read AI-generated content, our interpretations add another layer of distortion. If we project our assumptions onto what the system produces, we become part of the hallucination loop.

Hallucinations stem from three main factors: imperfect data, probabilistic prediction, and lack of real-world grounding. First, most large language models are trained on vast public datasets that contain both truth and misinformation. Because the model doesn’t inherently distinguish between the two, it replicates patterns of both accuracy and error.

Second, AI doesn’t reason in the traditional sense. It doesn’t know anything; it calculates probabilities. When asked a question, it estimates what sequence of words is most statistically likely to follow, based on its training. If a question falls outside its data range or involves ambiguous phrasing, the model fills in the gap with the most probable but not necessarily factual response.
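To make the idea of "most probable, not necessarily factual" concrete, here is a minimal sketch of a toy next-word predictor. It is an illustration only: real language models use neural networks over far larger contexts, and the three-sentence corpus, the function names, and the bigram counting approach are all assumptions made for this example. The corpus deliberately repeats a common mistake more often than the correct statement.

```python
from collections import Counter, defaultdict

# Toy training data, invented for this illustration. The incorrect
# statement appears twice and the correct one only once.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {word: n / total for word, n in followers.items()}


def most_likely_next(counts, prev):
    """Pick the statistically most likely next word, or None if unseen."""
    probs = next_word_probs(counts, prev)
    if not probs:
        return None
    return max(probs, key=probs.get)


counts = train_bigrams(corpus)
probs = next_word_probs(counts, "is")
prediction = most_likely_next(counts, "is")
print(probs)
print(prediction)
```

The predictor picks "sydney" because that word follows "is" most often in its data, even though Canberra is the capital. Nothing in the procedure checks the claim against the world; it only reflects the frequencies it was trained on.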

Third, AI lacks grounding in the real world: it has no built-in way to verify a claim in real time. It can’t browse the internet unless specifically connected to a live data source. So if you ask about something that changed after its last update, it may confidently give an outdated or simply wrong answer.

The implications stretch beyond trivia. In law, healthcare, and education, misinformation can have serious consequences. A chatbot suggesting fake legal precedents or citing fabricated medical sources can mislead professionals who rely on its fluency. Recognizing hallucination isn't only about technical improvement; it's about maintaining responsibility in human judgment.

The phrase “AI hallucination” may sound poetic, but it points to a practical truth: artificial intelligence doesn’t know what’s real until we teach it how to measure truth. And even then, the process depends on human oversight. Machines generate, but humans interpret.

Avoiding AI hallucination isn’t just a task for engineers; it’s a cognitive discipline for users. The most reliable defense is skepticism: questioning fluency, checking sources, and treating every generated claim as provisional. Transparency in AI design helps too. When users understand how a system produces responses, they’re less likely to misread its confidence as certainty.

Developers now use “truth models” or fact-checking layers that cross-verify output with trusted databases. Others train models with reinforcement learning from human feedback (RLHF), guiding them toward more grounded answers. Yet no model is immune, because language itself is ambiguous. Even the best system mirrors the biases and assumptions of its training data, which come from us.
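As a rough sketch of what a fact-checking layer might look like in its simplest form, the snippet below flags quoted titles in a generated answer that do not appear in a trusted list. Everything here is a simplifying assumption: the `TRUSTED_TITLES` set stands in for a curated database, the regular expression assumes titles are wrapped in straight double quotes, and the second title in the sample answer is invented for the example. Production systems are considerably more involved.

```python
import re

# Stand-in for a curated, trusted database of known publications
# (hypothetical; a real system would query an external source).
TRUSTED_TITLES = {
    "attention is all you need",
}

# Assumes cited titles appear inside straight double quotes.
QUOTED_TITLE = re.compile(r'"([^"]+)"')


def check_citations(generated_text, trusted_titles):
    """Split quoted titles into those found in the trusted set and those not."""
    verified, unverified = [], []
    for title in QUOTED_TITLE.findall(generated_text):
        key = title.strip().lower()
        if key in trusted_titles:
            verified.append(title)
        else:
            unverified.append(title)
    return verified, unverified


# Sample model output; the second title is made up for this example.
answer = 'See "Attention Is All You Need" and "Quantum Grammar in Large Models".'
verified, unverified = check_citations(answer, TRUSTED_TITLES)
print("verified:", verified)
print("needs review:", unverified)
```

An unverified title is not proof of fabrication, since the trusted list may simply be incomplete. The point of such a layer is to route doubtful claims to a human reviewer rather than to settle the truth automatically.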

The irony is that AI’s hallucinations may help us see our own. They expose how easily humans equate confidence with truth and how fragile our perception of accuracy can be. When a chatbot invents a false citation, it reflects our own mental shortcuts—the way we fill in blanks, trust tone over substance, and mistake probability for proof.

So perhaps the better question isn’t whether AI hallucinates, but whether we understand our own role in interpreting its visions.

AI hallucinations aren't signs of awareness or malfunction; they're the natural result of models trained on flawed or incomplete data. Still, they challenge how we understand accuracy, bias, and belief. Each AI-generated error reveals not just a system's limits but our own blurred lines between fact and fiction. These models don't believe anything; they predict patterns. But we often interpret those predictions as truth. The unsettling part isn't that machines make things up, but that we often trust them without question. When AI gives you a wrong answer, it may be highlighting something deeper: our willingness to believe what sounds right, even when it isn't.

How AI with multiple personalities enables systems to adapt behaviors across user roles and tasks

Effective AI governance ensures fairness and safety by defining clear thresholds, tracking performance, and fostering continuous improvement.

Explore the truth behind AI hallucination and how artificial intelligence generates believable but false information

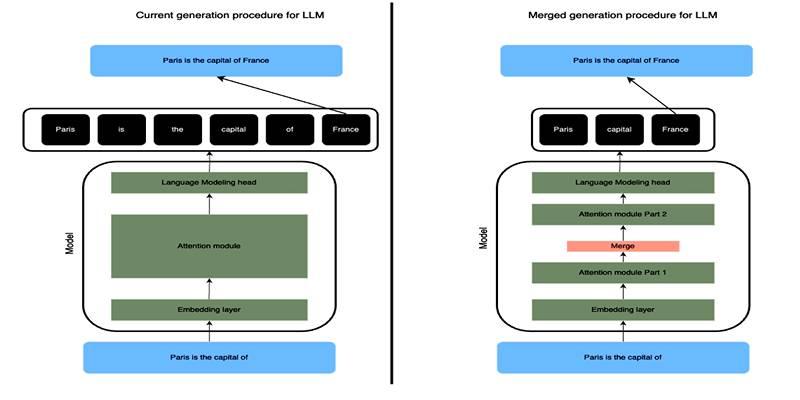

Learn how SLERP token merging trims long prompts, speeds LLM inference, and keeps output meaning stable and clean.

How to approach AI trends strategically, overcome FOMO, and turn artificial intelligence into a tool for growth and success.

Explore how Keras 3 simplifies AI/ML development with seamless integration across TensorFlow, JAX, and PyTorch for flexible, scalable modeling.

Craft advanced machine learning models with the Functional API and unlock the potential of flexible, graph-like structures.

How to avoid common pitfalls in data strategy and leverage actionable insights to drive real business transformation.

How neural networks revolutionize time-series data imputation, tackling challenges in missing data with advanced, adaptable strategies.

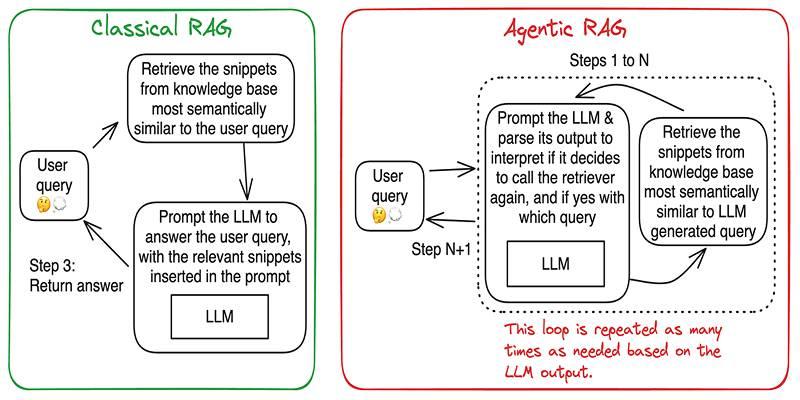

Build accurate, explainable answers by coordinating planner, retriever, writer, and checker agents with tight tool control.

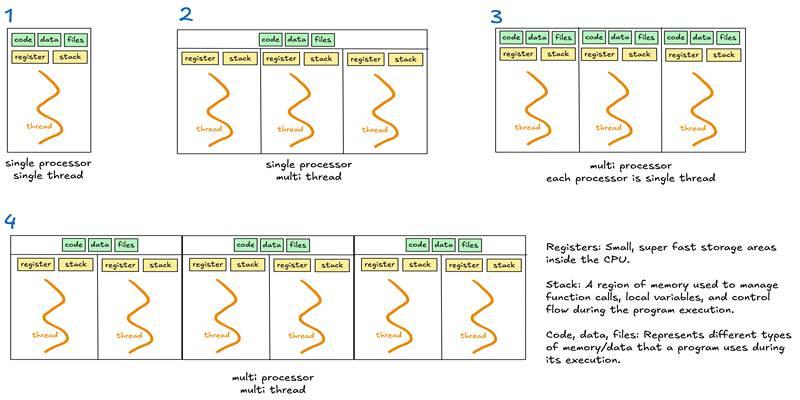

Learn when to use threads, processes, or asyncio to handle I/O waits, CPU tasks, and concurrency in real-world code.

Discover DeepSeek’s R1 training process in simple steps. Learn its methods, applications, and benefits in AI development