Simple, linear neural networks are well served in TensorFlow by the Sequential model. Only when you move to more complex architectures, with multiple inputs, multiple outputs, or branching paths, do you need the Functional API. It provides a powerful, flexible way to represent a model as a graph of layers, enabling designs that the Sequential model cannot express. This tutorial examines its fundamental ideas.

Suppose you are playing with LEGO bricks. The Sequential model is like stacking one brick on top of another to build a straight, linear tower. It is straightforward, quick, and easy to understand: you lay one brick, then another, and another, until your tower is finished. For many simple constructions, this is the best approach.

The Functional API, however, is like having the entire LEGO Technic set in your hands. You can still stack bricks, but you can also connect them side by side and add branches that split off and rejoin, producing complex, interconnected structures. You can build more than a tower: an elaborate car, a crane, or even the Millennium Falcon.

The most important difference is that the Functional API builds a graph. The Sequential model passes a single input through a linear stack of layers to a single output. The Functional API, by contrast, treats a model as a directed acyclic graph (DAG). This lets you route data through your network in nearly any way you can imagine, as long as the data never loops back on itself.

It helps to understand a little about the underlying mechanics of the Functional API. At its core, building a model comes down to specifying how tensors flow from one layer to the next.

Every model needs a starting point. In the Functional API, you begin by explicitly defining an Input object. Think of it as the entry point for your data: it declares the shape of the data that will be fed to the model. For example, if you are working with 28x28 pixel grayscale images, you would define an input with that shape. This Input object is a symbolic tensor. It acts as a placeholder for the real data the model will receive during training and inference.
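The snippet below is a minimal sketch of declaring an input for 28x28 grayscale images. It assumes Keras 3 (`import keras`, bundled with TensorFlow 2.16+); on older TensorFlow releases, `from tensorflow import keras` should behave the same way here. The input name `"image"` is an arbitrary choice.

```python
import keras

# A symbolic input for 28x28 grayscale images. The batch dimension is not
# part of `shape`; Keras adds it automatically as None.
inputs = keras.Input(shape=(28, 28), name="image")

# No real data exists yet: this tensor only records shape and dtype.
print(inputs.shape)
print(inputs.dtype)
```

Because the batch size is left open, the same model can later be fed batches of any size.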

Once you have an input, you start connecting layers, and this is where the Functional API gets its name. You call a layer on a tensor, as if the layer were a function, and get a new tensor back as the result. The layers act as functions, and the tensors are the data they transform.

Here's the process:

Call a layer on a tensor to get a new tensor, then use that output as the input to the next layer. Repeat until you reach the final output. Every connection you create this way is an edge in your graph.
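Here is a sketch of that chaining process. It assumes Keras 3 and uses illustrative layer sizes (64 hidden units, 10 output classes) that are not from the text. Each layer call returns a new symbolic tensor, and that tensor is passed to the next layer.

```python
import keras
from keras import layers

inputs = keras.Input(shape=(28, 28))

# Each call takes a tensor and returns a new one; the output of one layer
# becomes the input of the next, adding an edge to the graph.
x = layers.Flatten()(inputs)                          # (None, 784)
x = layers.Dense(64, activation="relu")(x)            # (None, 64)
outputs = layers.Dense(10, activation="softmax")(x)   # (None, 10)

print(x.shape, outputs.shape)
```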

Once you have defined the complete flow of data, from what the model receives to what it produces, you wrap the whole graph in a Model object. You do this by specifying which tensors act as the model's entry points (the Input tensors you defined earlier) and which act as its exit points (the tensors produced by your final layers).

The result is a complete Model object, just like a Sequential model: you can compile it, train it, and use it to make predictions. The difference is that its underlying structure can be much more complex.
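To make this concrete, the sketch below wraps a small layer graph in `keras.Model`, then compiles, trains, and predicts exactly as you would with a Sequential model. It assumes Keras 3. The training data is random and serves only to show the calls, so the model learns nothing meaningful.

```python
import numpy as np
import keras
from keras import layers

inputs = keras.Input(shape=(28, 28))
x = layers.Flatten()(inputs)
x = layers.Dense(64, activation="relu")(x)
outputs = layers.Dense(10, activation="softmax")(x)

# Wrap the graph: name its entry point (inputs) and exit point (outputs).
model = keras.Model(inputs=inputs, outputs=outputs, name="small_classifier")

# From here on it behaves like any Sequential model.
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Random stand-in data (an assumption, purely for illustration).
x_train = np.random.rand(32, 28, 28).astype("float32")
y_train = np.random.randint(0, 10, size=(32,))
model.fit(x_train, y_train, epochs=1, batch_size=8, verbose=0)

preds = model.predict(x_train[:4], verbose=0)
print(preds.shape)
```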

The Functional API adds some complexity, but that cost buys a wide range of possibilities for building more sophisticated neural network architectures.

Numerous problems in the real world cannot be addressed with a single input and a single output.

Suppose you want to predict the price of a house. The inputs could include structured data, such as the number of bedrooms and the square footage, but also an image of the house and a text description. With the Functional API, you can build a single model that takes all of these inputs at once. One branch of the network might handle the numerical data, another the image, and a third the text. You can then merge these branches to produce a final prediction.
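A minimal sketch of such a multi-input model follows. It assumes Keras 3. All sizes are hypothetical: 4 tabular features, 64x64 RGB photos, descriptions already tokenized to 20 integer ids, and a 1,000-word vocabulary. Each input gets its own small branch, and the branches are concatenated before a single regression output.

```python
import numpy as np
import keras
from keras import layers

# Three hypothetical inputs (sizes are illustrative assumptions).
tabular_in = keras.Input(shape=(4,), name="tabular")        # bedrooms, sq ft, ...
image_in = keras.Input(shape=(64, 64, 3), name="photo")
text_in = keras.Input(shape=(20,), dtype="int32", name="description")

# Branch 1: structured numerical data.
t = layers.Dense(16, activation="relu")(tabular_in)

# Branch 2: the image.
i = layers.Conv2D(8, 3, activation="relu")(image_in)
i = layers.GlobalAveragePooling2D()(i)

# Branch 3: the text, as token ids (vocabulary size 1000 assumed).
w = layers.Embedding(input_dim=1000, output_dim=16)(text_in)
w = layers.GlobalAveragePooling1D()(w)

# Merge the branches and predict a single price.
merged = layers.Concatenate()([t, i, w])
price = layers.Dense(1, name="price")(merged)

model = keras.Model(inputs=[tabular_in, image_in, text_in], outputs=price)
model.compile(optimizer="adam", loss="mse")

batch = [
    np.random.rand(2, 4).astype("float32"),
    np.random.rand(2, 64, 64, 3).astype("float32"),
    np.random.randint(0, 1000, size=(2, 20)).astype("int32"),
]
preds = model.predict(batch, verbose=0)
print(preds.shape)
```

Inputs are passed as a list in the same order as `inputs=[...]`. Concatenation is only one way to merge branches; it is used here because it is the simplest.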

Or take a model that analyzes news articles. You might want it to do two things at once: classify the article's category (e.g., "sports," "politics") and assign the most relevant tags. The Functional API lets you define two distinct output layers, one for the category prediction and one for the tag predictions.
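The sketch below shows one way to build such a two-headed model. It assumes Keras 3, articles pre-tokenized to 100 integer ids, 5 categories, and 12 possible tags; all of these sizes are hypothetical. The category head uses softmax because each article has exactly one category. The tag head uses independent sigmoids because an article can carry several tags at once.

```python
import numpy as np
import keras
from keras import layers

NUM_CATEGORIES = 5   # e.g. sports, politics, ... (assumed)
NUM_TAGS = 12        # size of the tag vocabulary (assumed)

article_in = keras.Input(shape=(100,), dtype="int32", name="article_tokens")
x = layers.Embedding(input_dim=5000, output_dim=32)(article_in)
x = layers.GlobalAveragePooling1D()(x)
x = layers.Dense(64, activation="relu")(x)

# Two output heads on the same shared trunk.
category = layers.Dense(NUM_CATEGORIES, activation="softmax", name="category")(x)
tags = layers.Dense(NUM_TAGS, activation="sigmoid", name="tags")(x)  # multi-label

model = keras.Model(inputs=article_in, outputs=[category, tags])

# One loss per output, in the same order as `outputs`.
model.compile(optimizer="adam",
              loss=["sparse_categorical_crossentropy", "binary_crossentropy"])

# Random stand-in data, for illustration only.
tokens = np.random.randint(0, 5000, size=(8, 100)).astype("int32")
y_cat = np.random.randint(0, NUM_CATEGORIES, size=(8,))
y_tags = np.random.randint(0, 2, size=(8, NUM_TAGS)).astype("float32")
model.fit(tokens, [y_cat, y_tags], epochs=1, verbose=0)

cat_probs, tag_probs = model.predict(tokens, verbose=0)
```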

Layer sharing is another powerful feature: a single layer instance can be reused at several points in a model, or even across multiple models. Because every use shares the same weights, the model learns one representation that applies to several inputs or branches. This can be more parameter-efficient and may improve generalization.

For example, in a model that compares the similarity of two images, you might have two inputs, one per image. You can then pass both through the same set of convolutional layers (a shared "encoder"). The encoder's outputs can then be compared to measure similarity. Because the same layers process both images, the model learns to extract features from each in the same way.
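Here is a sketch of the shared-encoder idea. It assumes Keras 3 and small 32x32 grayscale images; the layer sizes are illustrative. Cosine similarity via `layers.Dot(normalize=True)` is one common way to compare the two embeddings, chosen here for simplicity. The key point is that `encoder` is created once and called twice, so both images go through the same weights.

```python
import numpy as np
import keras
from keras import layers

# One encoder instance; calling it twice reuses the same weights.
encoder = keras.Sequential(
    [
        layers.Conv2D(16, 3, activation="relu"),
        layers.GlobalAveragePooling2D(),
        layers.Dense(32),
    ],
    name="shared_encoder",
)

img_a = keras.Input(shape=(32, 32, 1), name="image_a")
img_b = keras.Input(shape=(32, 32, 1), name="image_b")

emb_a = encoder(img_a)
emb_b = encoder(img_b)

# Cosine similarity between the two embeddings, in [-1, 1].
similarity = layers.Dot(axes=1, normalize=True)([emb_a, emb_b])

model = keras.Model(inputs=[img_a, img_b], outputs=similarity)

pics = np.random.rand(3, 32, 32, 1).astype("float32")
sims = model.predict([pics, pics], verbose=0)  # identical pairs
```

Because the weights are shared, the whole model has no more trainable variables than the encoder alone. Identical image pairs also map to identical embeddings, so their similarity is 1.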

The beauty of the Functional API lies in its ability to create complex network configurations, such as residual connections and multi-branch modules.

Residual (skip) connections are common in very deep networks such as ResNet. In these networks, the input to a block is added back to the block's output. This helps gradients flow during backpropagation and makes it possible to train much deeper networks. The Sequential model cannot express this, because the block's input must be used in two places.
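A minimal residual block might look like the sketch below. It assumes Keras 3 and an illustrative 32x32x16 input. Both convolutions use `padding="same"` and 16 filters so the main path's output has the same shape as the input, which `layers.Add` requires. The input tensor is used twice: once by the main path and once by the skip connection.

```python
import keras
from keras import layers

inputs = keras.Input(shape=(32, 32, 16))

# Main path: two convolutions that preserve the input's shape.
x = layers.Conv2D(16, 3, padding="same", activation="relu")(inputs)
x = layers.Conv2D(16, 3, padding="same")(x)

# Skip connection: add the block's input back to its output.
x = layers.Add()([x, inputs])
outputs = layers.Activation("relu")(x)

model = keras.Model(inputs, outputs, name="residual_block")
```

If the shapes differed, for example because the main path changed the number of channels, a 1x1 convolution on the skip path is a common way to make them match.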

Inception-style modules consist of several parallel chains of convolutional filters whose outputs are then concatenated. This lets the network learn features at different scales at the same time.
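The sketch below is a simplified Inception-style block, assuming Keras 3. The filter counts are illustrative and not taken from any published architecture. Four parallel branches read the same input with different receptive fields (1x1, 3x3, 5x5, and pooling). Every branch keeps the spatial size via `padding="same"`, so their outputs can be concatenated along the channel axis.

```python
import keras
from keras import layers

inputs = keras.Input(shape=(32, 32, 3))

# Parallel branches look at the same input at different scales.
branch_1x1 = layers.Conv2D(16, 1, padding="same", activation="relu")(inputs)

branch_3x3 = layers.Conv2D(16, 1, padding="same", activation="relu")(inputs)
branch_3x3 = layers.Conv2D(24, 3, padding="same", activation="relu")(branch_3x3)

branch_5x5 = layers.Conv2D(8, 1, padding="same", activation="relu")(inputs)
branch_5x5 = layers.Conv2D(8, 5, padding="same", activation="relu")(branch_5x5)

branch_pool = layers.MaxPooling2D(3, strides=1, padding="same")(inputs)
branch_pool = layers.Conv2D(8, 1, padding="same", activation="relu")(branch_pool)

# Concatenate along the channel axis: 16 + 24 + 8 + 8 = 56 channels.
outputs = layers.Concatenate(axis=-1)(
    [branch_1x1, branch_3x3, branch_5x5, branch_pool]
)

model = keras.Model(inputs, outputs, name="inception_style_block")
print(outputs.shape)
```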

Before you can master the Functional API, the best first step is to understand the concepts behind it. The Sequential model is a very good place to start. When you move on to more advanced deep learning work, however, the Functional API is the tool to use. It offers the flexibility to build models tailored to the complexities of your particular problem.

Once you start thinking of your models as graphs of interconnected layers, you can design solutions for a far broader set of tasks. The Functional API gives you the flexibility to build whatever you can imagine. That could be a model with multiple inputs, one that performs several tasks at once, or an advanced state-of-the-art architecture.

The Functional API is a fundamental part of the modern deep learning toolkit. It empowers developers and researchers to move beyond simple linear models and construct sophisticated architectures that can tackle complex, real-world challenges. By embracing the graph-like nature of model building, you can unlock new levels of performance and innovation in your machine learning projects.

How AI with multiple personalities enables systems to adapt behaviors across user roles and tasks

Effective AI governance ensures fairness and safety by defining clear thresholds, tracking performance, and fostering continuous improvement.

Explore the truth behind AI hallucination and how artificial intelligence generates believable but false information

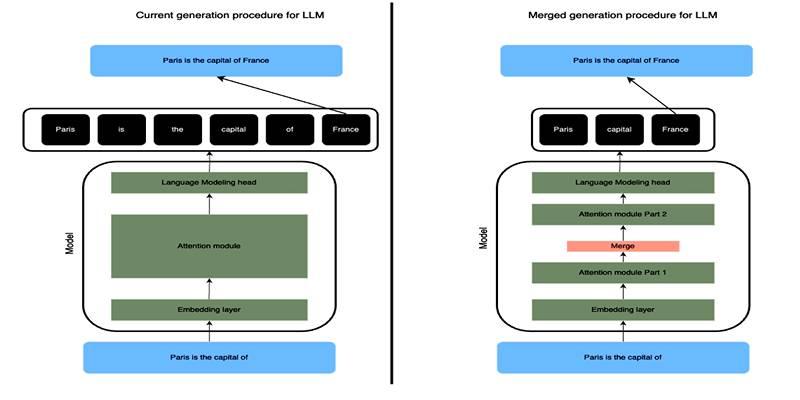

Learn how SLERP token merging trims long prompts, speeds LLM inference, and keeps output meaning stable and clean.

How to approach AI trends strategically, overcome FOMO, and turn artificial intelligence into a tool for growth and success.

Explore how Keras 3 simplifies AI/ML development with seamless integration across TensorFlow, JAX, and PyTorch for flexible, scalable modeling.

Craft advanced machine learning models with the Functional API and unlock the potential of flexible, graph-like structures.

How to avoid common pitfalls in data strategy and leverage actionable insights to drive real business transformation.

How neural networks revolutionize time-series data imputation, tackling challenges in missing data with advanced, adaptable strategies.

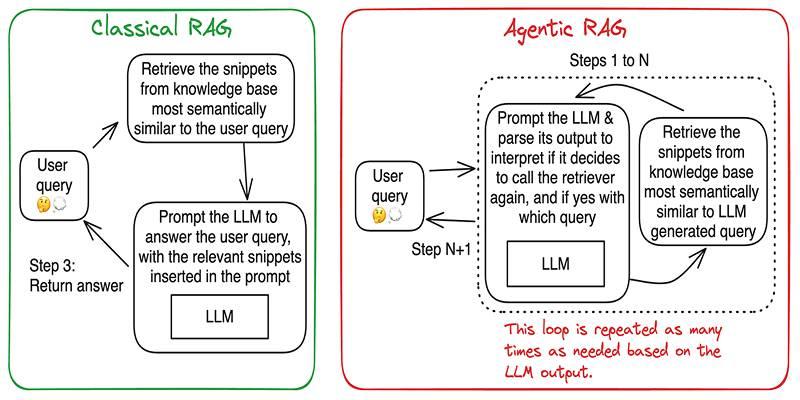

Build accurate, explainable answers by coordinating planner, retriever, writer, and checker agents with tight tool control.

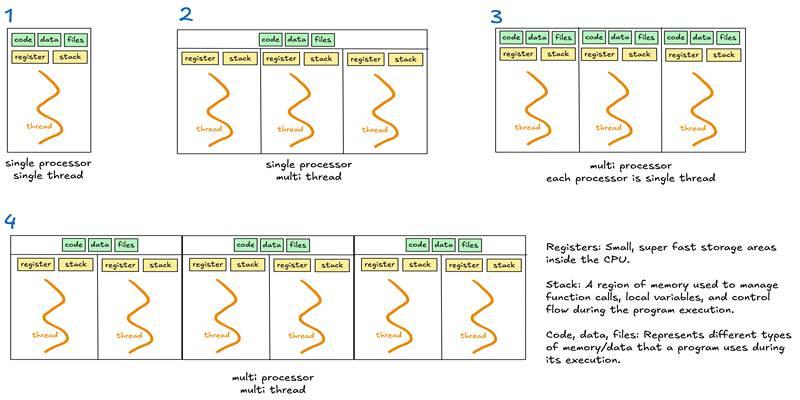

Learn when to use threads, processes, or asyncio to handle I/O waits, CPU tasks, and concurrency in real-world code.

Discover DeepSeek’s R1 training process in simple steps. Learn its methods, applications, and benefits in AI development